Scaling DevOps with Machine Intelligence

To integrate AI effectively, you need to understand how Transformer architectures and vector embeddings move your infrastructure work from basic automation toward LLM observability. This paper walks through those concepts, explains how to deploy GenAI in serverless environments, and shows when to engineer solutions that go beyond standard RAG or fine-tuning.

Table of Contents

- Generative AI and Observability in the Serverless World

- Transformer and Generative AI Concepts

- Going Beyond RAG and Fine-Tuning

- MLOps: Bridging the Gap Between ML and DevOps

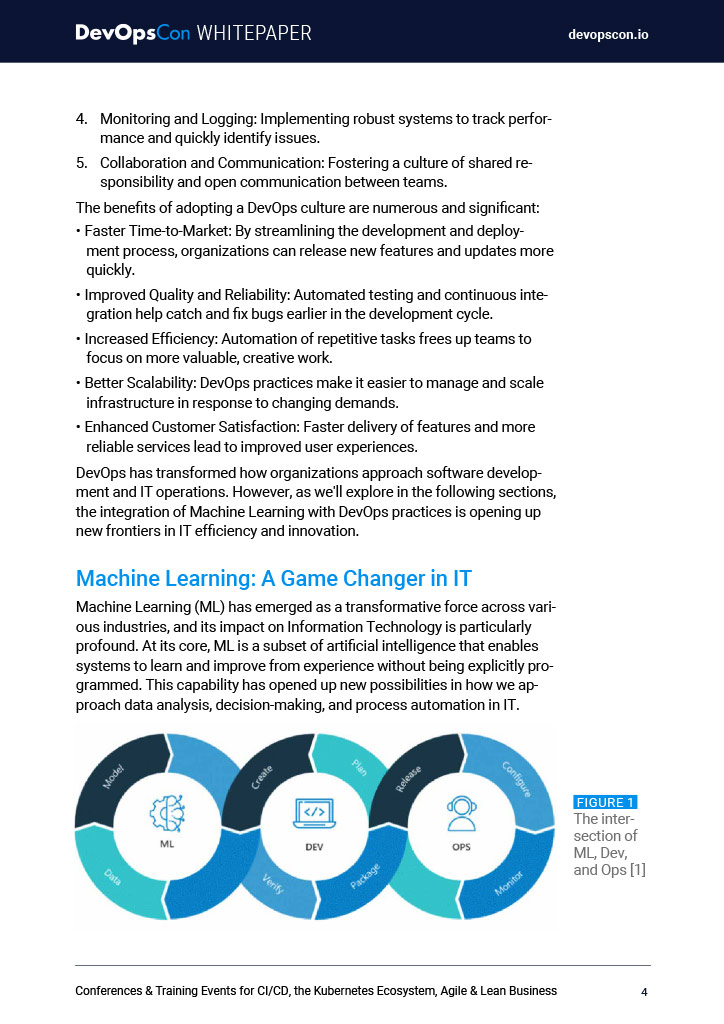

- Machine Learning: A Game Changer in IT

- Conclusion
