Introduction

In this blog post, I share my experiences using different AI tools for Infrastructure as Code. Why this combination in particular?

I am a Cloud Adoption Engineer with years of experience as a software developer, specializing in CI/CD and container technology. I’m part of a team responsible for enabling our company’s transformation toward Software as a Service (SaaS).

As a result, I do a lot of research, learn about best practices, and (of course) implement them. One key part of this transformation is provisioning and automating cloud resources, which we do using Infrastructure as Code (IaC).

We want to create safe and productive environments for our customers. To do this, we offer Infrastructure as Code tooling as part of our platform service, which every employee can use when working with cloud services.

That’s why I’m focusing on Infrastructure as Code, or more precisely, on Terraform. Many companies try to accelerate their development using AI, and so do we.

I use GitHub Copilot every day, though not only for developing Terraform modules. In my case, the preferred cloud platform is Microsoft Azure.

AI enables us to speed up development, and there are now many opportunities to do so. I’m always excited about new approaches, and nowadays you can’t avoid the topic of AI. These possibilities also come with an obligation to use AI correctly and to verify the suggested solutions. In this post, I’ll show how I work with AI tools for Infrastructure as Code.

What is Infrastructure as Code?

Instead of manually configuring resources through clicks in the Azure Portal, Infrastructure as Code (IaC) allows you to define and deploy cloud resources using definition files. These files describe the infrastructure in code, streamlining the process and ensuring consistency.

In IaC, infrastructure management is treated similarly to software development, where configuration scripts and files are written, version-controlled, and automated.

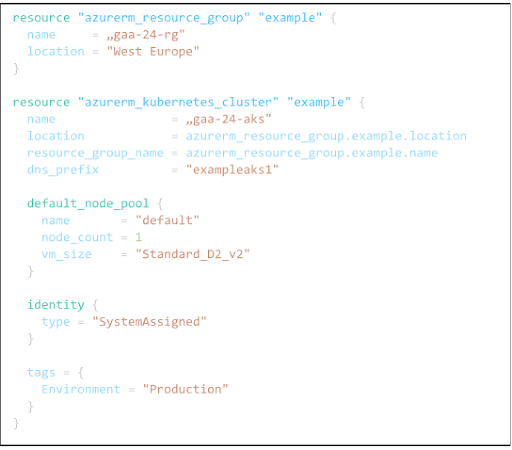

For example, with HashiCorp’s Terraform, you can write code to create an Azure Resource Group and an Azure Kubernetes Cluster.

In this case, an Azure Kubernetes Cluster, named “gaa-24-aks,” is defined with a default node pool containing one node. This cluster is part of the resource group “gaa-24-rg” and is deployed in the West Europe region.

The example omits the terraform block that configures Terraform itself (for instance, its required providers). It also illustrates that a Terraform configuration can be split across multiple “.tf” files or consolidated into a single file.
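To make the description more concrete, here is a minimal sketch of what such a configuration could look like. The resource names, the single node, and the West Europe region come from the text above; the local names `rg` and `aks`, the `dns_prefix`, the node pool name, the VM size, and the system-assigned managed identity are my own assumptions, so the screenshot may differ. A complete configuration would also need the terraform and provider blocks.

```hcl
# Resource group that holds the cluster
resource "azurerm_resource_group" "rg" {
  name     = "gaa-24-rg"
  location = "West Europe"
}

# AKS cluster in that resource group with a one-node default pool
resource "azurerm_kubernetes_cluster" "aks" {
  name                = "gaa-24-aks"
  location            = azurerm_resource_group.rg.location
  resource_group_name = azurerm_resource_group.rg.name
  dns_prefix          = "gaa-24-aks" # assumption: any DNS-compatible prefix

  default_node_pool {
    name       = "default"        # assumption: node pool name
    node_count = 1
    vm_size    = "Standard_D2_v2" # assumption: VM size
  }

  # assumption: system-assigned managed identity for the cluster
  identity {
    type = "SystemAssigned"
  }
}
```

Referencing `azurerm_resource_group.rg.location` and `.name` instead of repeating the strings lets Terraform infer that the resource group must be created before the cluster.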

The advantages of using Infrastructure as Code to automate cloud resource management are clear: the code can be version-controlled, resources are reproducible, and the process saves significant time by eliminating manual configuration. This makes IaC an essential practice for efficiently managing cloud infrastructure.

Example usages of AI tools for Infrastructure as Code

AI tools can be used in the following tasks:

- Answering questions

- Code generation

- Security

- Code interpretation

For these tasks, I’ll use the following tools:

- GitHub Copilot

- Microsoft Copilot

- Gemini

- Azure DevOps

Up front: my main focus is the combination of GitHub Copilot and Terraform.

Answering questions

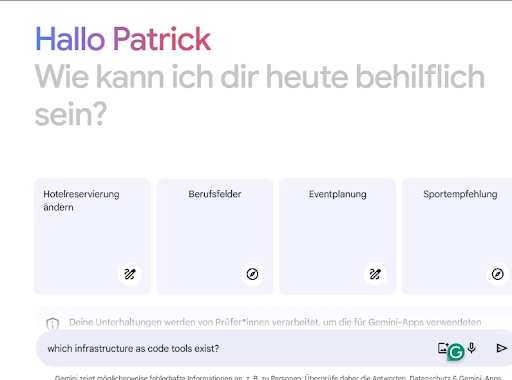

Before I start implementing Infrastructure as Code, I need a few questions answered. I start with a simple, general question for Gemini:

“Which infrastructure as code tools exist?”

The first results list Terraform and Pulumi as universal tools. In addition, Gemini lists cloud-specific tools.

The question was broad, so a general answer is acceptable. I appreciate the brief yet informative explanations given by Gemini.

For the next use cases, I’ll stick to Terraform; as for the AI tool, I’ll use Microsoft Copilot.

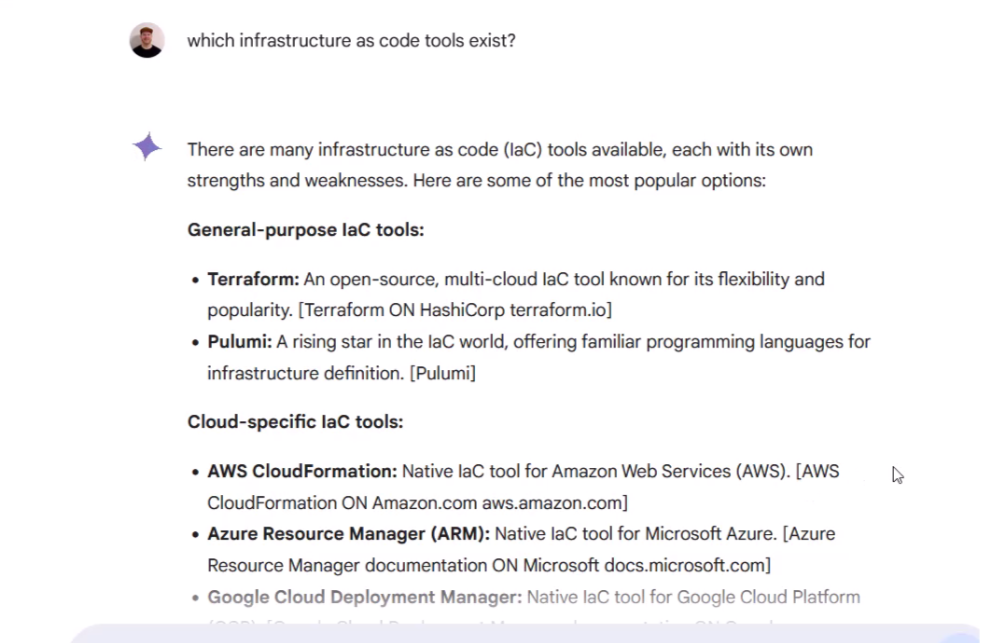

I want detailed information on getting started with Infrastructure as Code using Terraform.

The question for that is:

“Should I use GitHub Copilot or Microsoft Copilot if I’d like to work with Terraform?”

Here I get a clear recommendation to use GitHub Copilot when I intend to start coding:

This leads perfectly into the next use case: code generation. So far we’ve only asked general questions to gain some know-how up front; now let’s consider some more advanced usages.

Code Generation

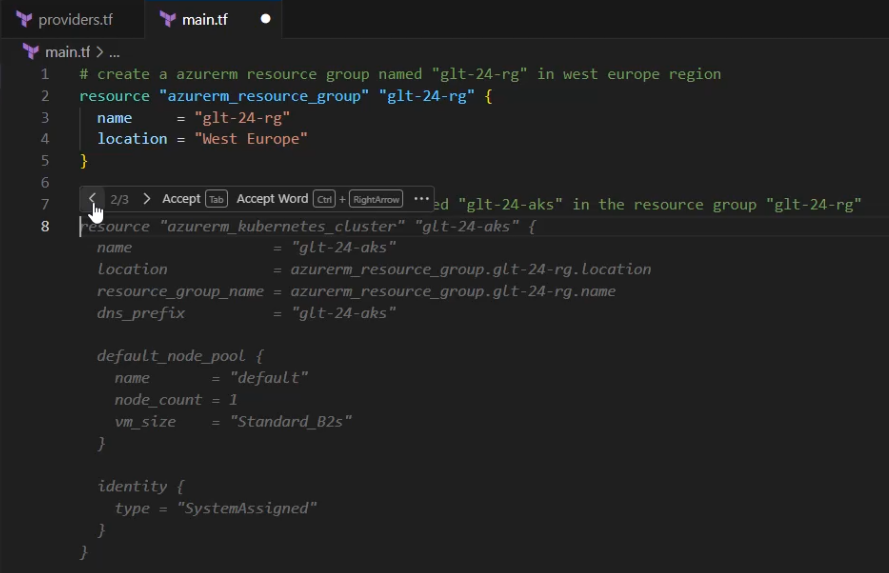

I’ll use Visual Studio Code as the IDE for this use case. The goal is to create a Terraform configuration that can deploy an Azure Kubernetes Cluster.

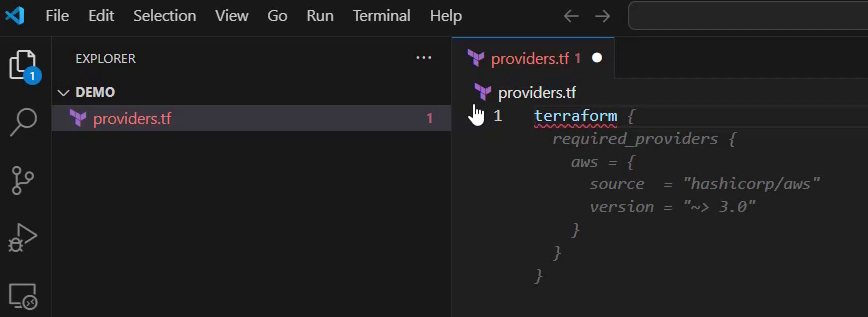

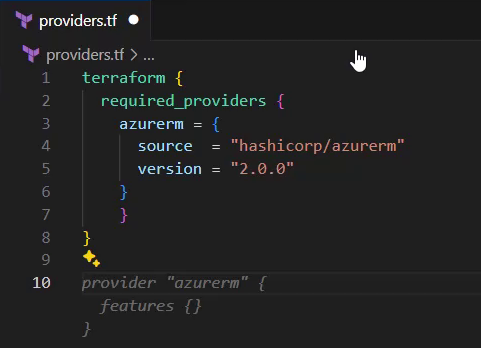

I start from scratch by creating a file named “providers.tf”. As soon as I type the word “terraform”, GitHub Copilot offers a suggestion, shown as gray text below.

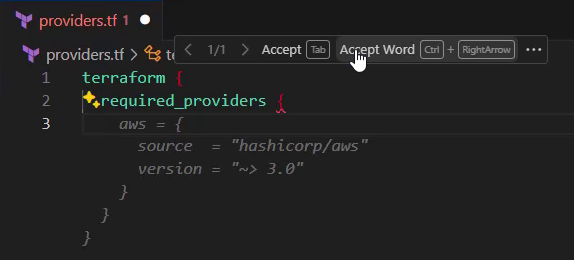

If it fit perfectly, I could accept the suggestion by pressing the Tab key. Unfortunately, the suggestion doesn’t include the azurerm provider, so I hover over the block and click “Accept Word” a few times until I reach the line starting with aws:

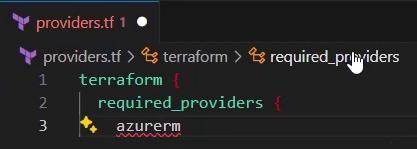

I remove aws and replace it with azurerm, as I would like to provision resources on Azure:

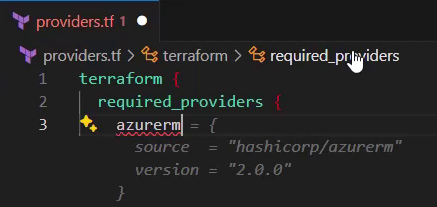

GitHub Copilot then provides the next suggestion:

It contains the desired configuration for the Azure Resource Manager (azurerm) provider, and I accept it by pressing the Tab key to complete the terraform block.

After pressing Enter to move to a new line, I immediately get the next suggestion (see the grayed-out text in the picture below):

It is the provider block starting at line 10. This fits as is, since the provider block configures the azurerm provider, so I accept it again by pressing the Tab key:
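Written out as text, a “providers.tf” along these lines might look like the following sketch. The `source` address `hashicorp/azurerm` and the comments are my assumptions; the version `2.0.0` is the one accepted from the suggestion, which the post revisits during validation.

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm" # assumption: official registry address
      version = "2.0.0"             # version accepted from the suggestion
    }
  }
}

# Configures the Azure Resource Manager provider
provider "azurerm" {
  features {} # required by azurerm 2.x and later, even when empty
}
```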

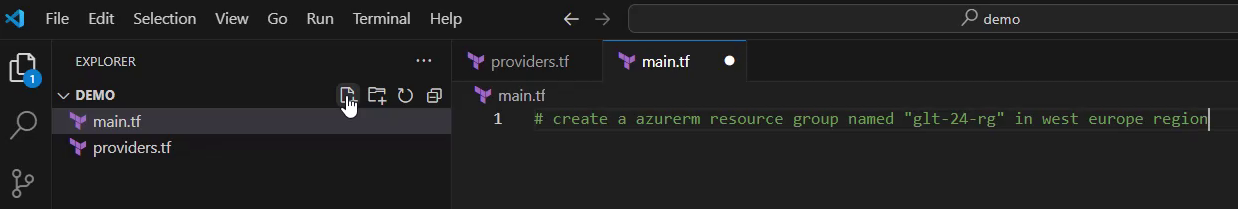

With the “providers.tf” file done, I create a new file named “main.tf”, in which I define the resource blocks for the Azure Kubernetes Cluster:

This time I use a different approach to generate the code: instead of typing keywords, I add a comment describing the purpose. The comment, starting with a hashtag, reads: “create a azurerm resource group named ‘glt-24-rg’ in west europe region”:

After finishing the comment, which requests a resource group named “glt-24-rg” in the region “West Europe”, and pressing Enter, I get the following suggestion (see the grayed-out text in the picture below):

GitHub Copilot provides the correct resource block, which defines an azurerm resource group.
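As text, the comment and the kind of resource block it produces might look like this. The local name `rg` is an assumption, and Copilot’s actual suggestion may differ in details.

```hcl
# create a azurerm resource group named "glt-24-rg" in west europe region
resource "azurerm_resource_group" "rg" {
  name     = "glt-24-rg"
  location = "West Europe"
}
```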

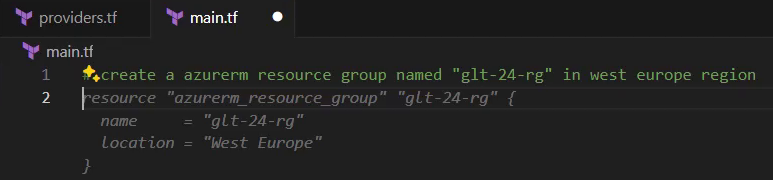

Finally, I want to add the resource block for the Azure Kubernetes Cluster.

So, I create a new comment requesting an Azure Kubernetes Cluster named “glt-24-aks” that belongs to the previously defined resource group “glt-24-rg”.

The content of the comment, again starting with a hashtag:

“Create an azure Kubernetes cluster named ‘glt-24-aks’ in the resource group ‘glt-24-rg’”

I select the second of three suggestions for this resource block, and the desired resource block appears starting at line 8:
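A resource block of the kind described here could look like the sketch below. Only the cluster name and the resource group come from the prompt; `dns_prefix`, the node pool settings, and the system-assigned identity are illustrative assumptions, not necessarily what Copilot generated. The block assumes the resource group was declared with the local name `rg`.

```hcl
# Create an azure Kubernetes cluster named "glt-24-aks" in the resource group "glt-24-rg"
resource "azurerm_kubernetes_cluster" "aks" {
  name                = "glt-24-aks"
  location            = azurerm_resource_group.rg.location
  resource_group_name = azurerm_resource_group.rg.name
  dns_prefix          = "glt-24-aks" # assumption

  default_node_pool {
    name       = "default"        # assumption
    node_count = 1                # assumption
    vm_size    = "Standard_D2_v2" # assumption
  }

  identity {
    type = "SystemAssigned" # assumption: managed identity for the cluster
  }
}
```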

This completes the Terraform configuration, written with the help of the AI pair programming tool GitHub Copilot. Now I want to verify that it works as expected by provisioning the resources on Azure.

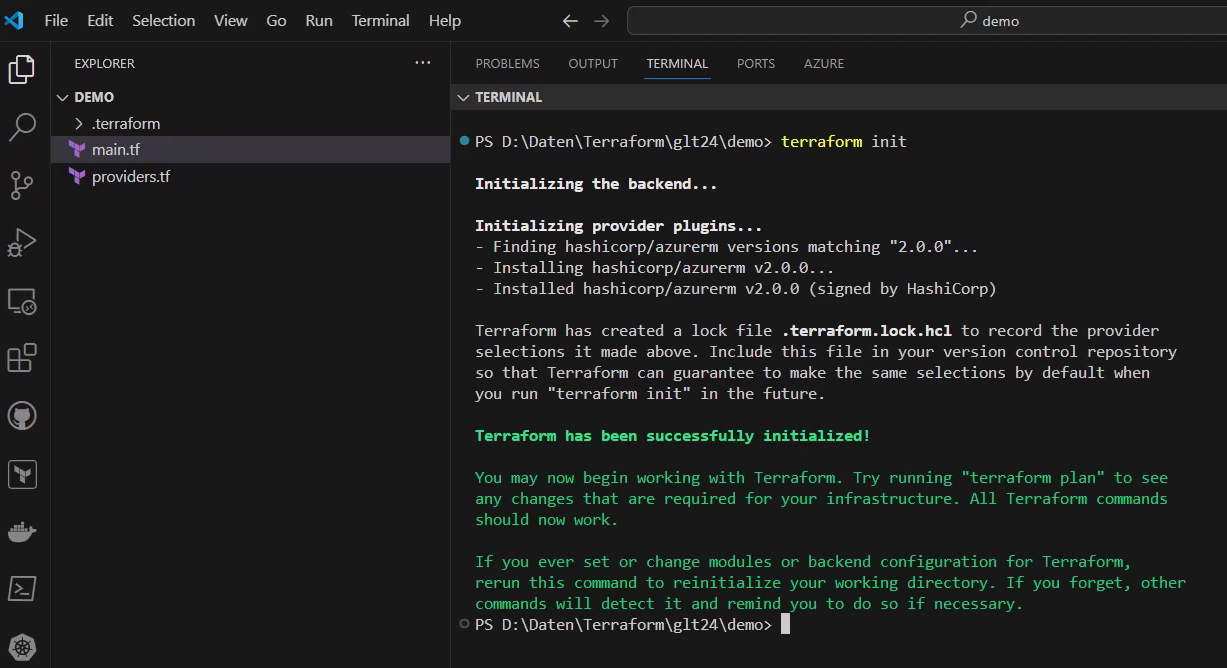

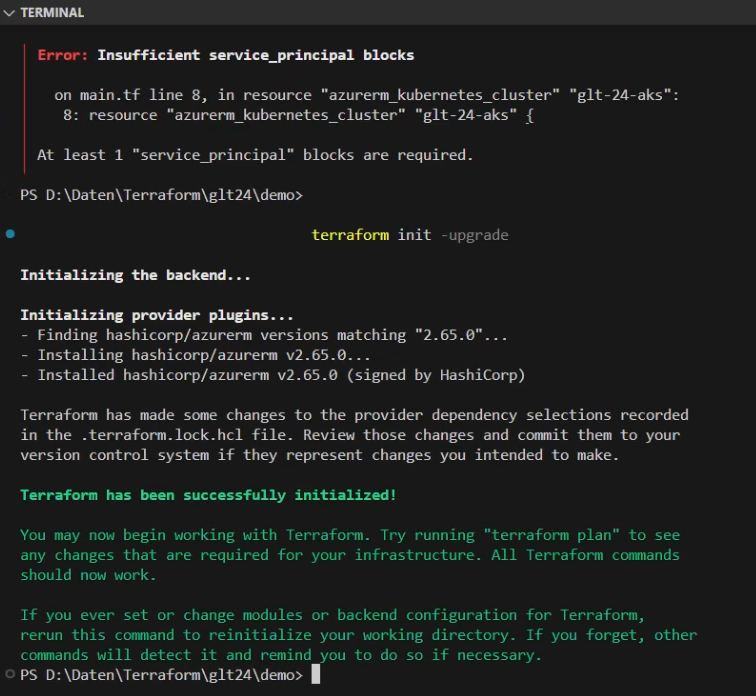

This means running the corresponding Terraform commands, starting with “terraform init”:

That’s the first command to run; among other things, it initializes the working directory and downloads the required provider plugin.

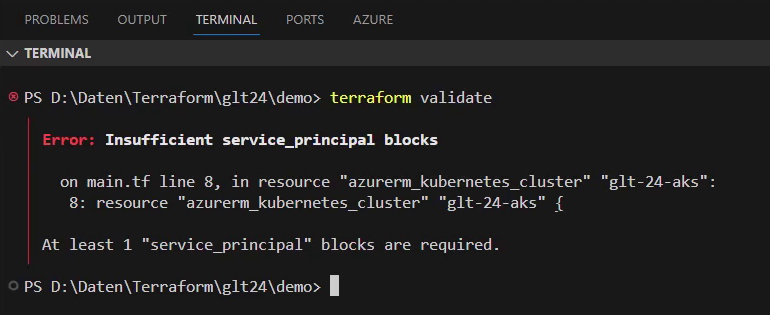

I continue with the “terraform validate” command, which, as the name suggests, validates the Terraform configuration. Unfortunately, an error appears:

It seems I’m missing a block related to a service principal. I have two options: follow the error message and add the proposed block, or review the whole Terraform configuration again. I decide to go with the second option.
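For comparison, the first option would mean adding a `service_principal` block to the `azurerm_kubernetes_cluster` resource, roughly as in the fragment below. This is a sketch, not the block from the error message: `var.aks_client_id` and `var.aks_client_secret` are hypothetical input variables you would have to declare and populate yourself, ideally marking the secret as sensitive.

```hcl
# Fragment for inside the azurerm_kubernetes_cluster resource.
# var.aks_client_id and var.aks_client_secret are hypothetical variables.
service_principal {
  client_id     = var.aks_client_id
  client_secret = var.aks_client_secret
}
```

A service principal means managing and rotating a secret yourself, which is one reason a managed identity is often the more convenient choice.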

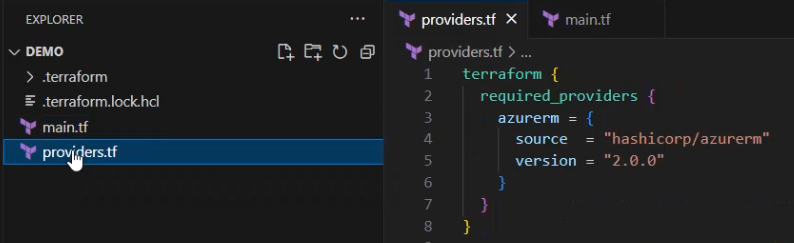

Reviewing the file “providers.tf”, I notice that I had accepted “2.0.0” as the version of the required provider:

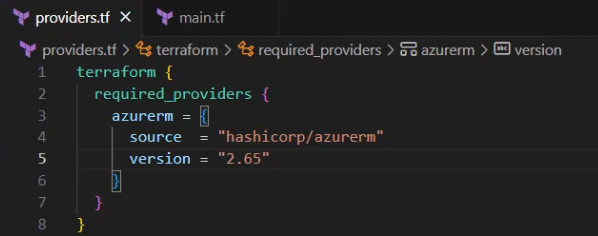

That version is valid in itself, but the generated resource block is not compatible with it. After some quick research, I find that a newer version would solve my problem:

So I replace “2.0.0” with “2.65” and save the file.
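After the change, the version constraint could read as in this sketch (the `source` address is again an assumption). An exact pin like `2.65` is what the post uses; the commented-out pessimistic constraint `~> 2.65` would be an alternative that also accepts later 2.x releases.

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm" # assumption: official registry address
      version = "2.65"              # exact version pin
      # version = "~> 2.65"         # alternative: 2.65 or any later 2.x release
    }
  }
}
```

An exact pin keeps deployments reproducible; the pessimistic constraint trades some of that for picking up fixes without editing the file.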

Now I must initialize the configuration again, this time with “terraform init -upgrade”, so that Terraform installs the newly specified provider version:

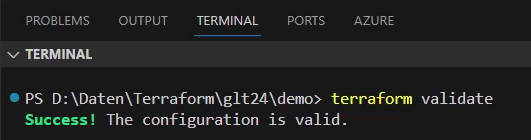

Now “terraform validate” no longer reports an error:

After that, I want to ensure that the Terraform configuration follows the Terraform language style conventions, which is what the “terraform fmt” command is for. It rewrites the files to the canonical format and lists every file it changed; if it prints nothing, the configuration already follows the conventions.

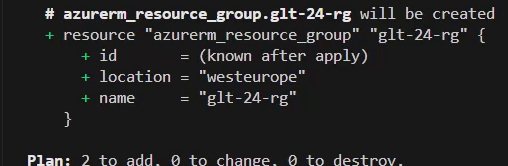

Next, I run “terraform plan”, which creates an execution plan; with the “-out” option (“terraform plan -out tfplan”), it saves the plan to a file named “tfplan”. The execution plan summarizes all necessary actions.

In my case, it plans to provision two resources: the resource group and the Azure Kubernetes Cluster.

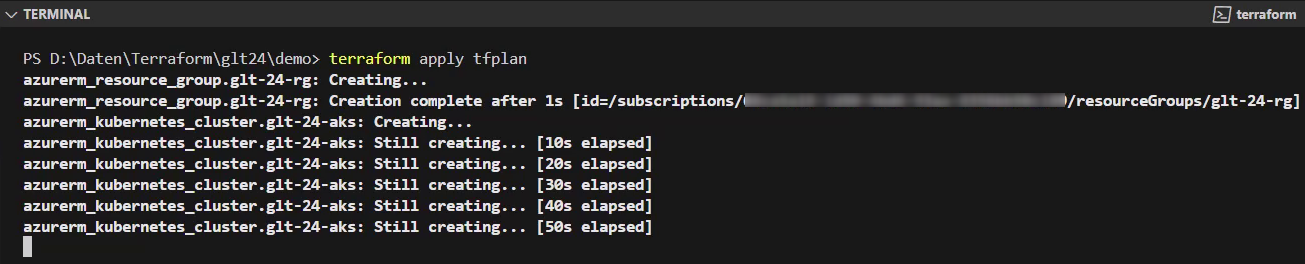

Finally, I run the command that triggers the deployment of the Azure resources, “terraform apply tfplan”:

After triggering it, I can observe the progress of the provisioning of the resources.

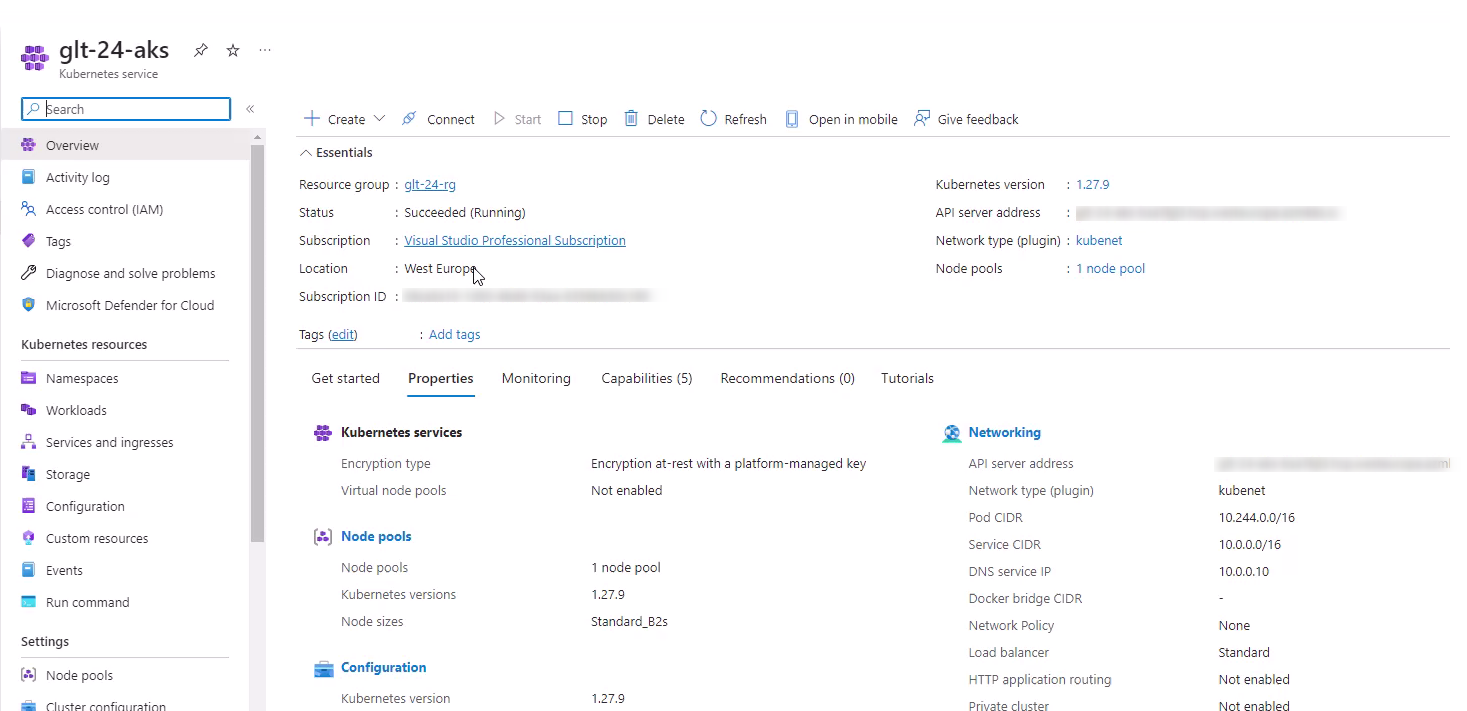

The command takes a few minutes to complete. I also verify the result in the Azure Portal, where I can see that the Azure Kubernetes Cluster is ready:

So the Azure Kubernetes Cluster was deployed successfully, and GitHub Copilot’s suggestions worked well. Of course, the AI pair programming tool couldn’t know up front which provider version would fit in the end.

Security

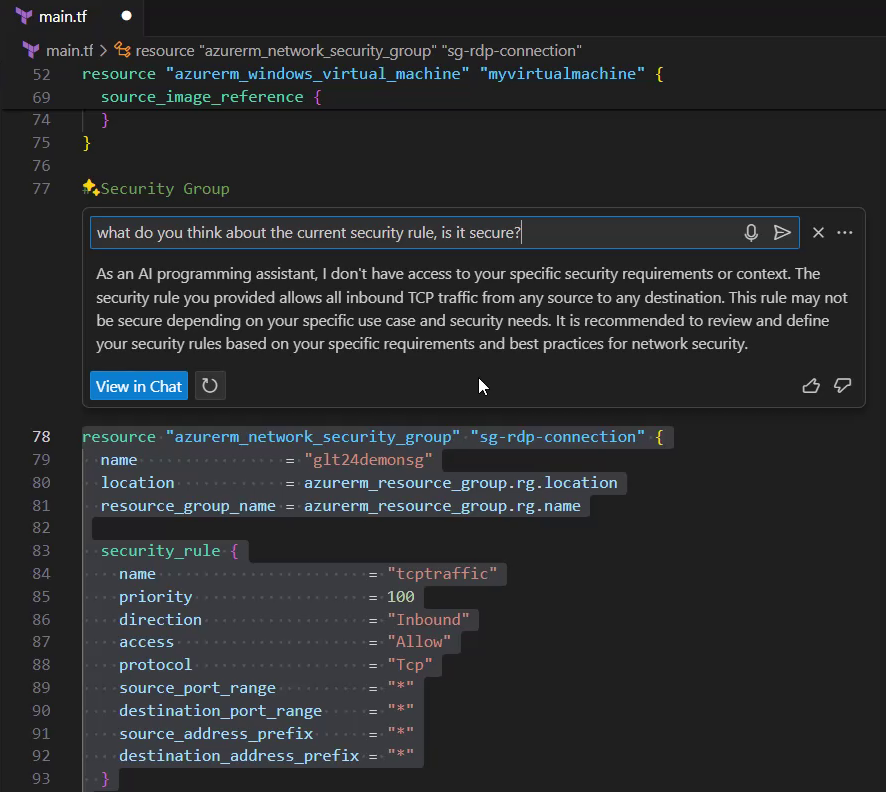

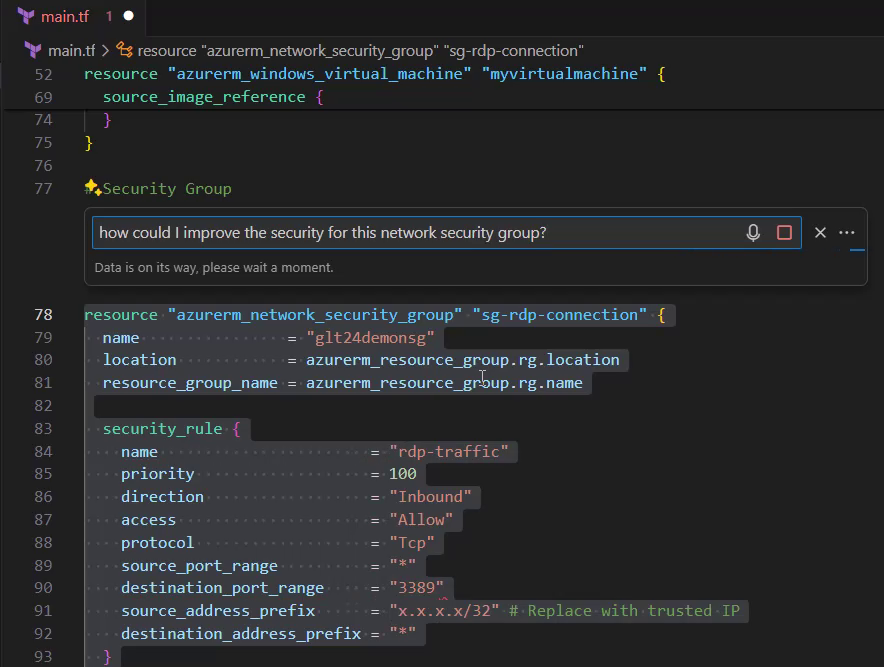

Imagine you have to review the following code. What catches your eye? Of course, lots of asterisks could be suspicious, and they are.
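Since the code under review is only shown as a screenshot, here is a hypothetical reconstruction of a rule with the same problem, based on the description later in this section (all inbound TCP traffic from any source to any destination). The names and priority are illustrative, and it assumes a resource group declared with the local name `rg`.

```hcl
# Hypothetical example: every "*" leaves that dimension wide open
resource "azurerm_network_security_group" "nsg" {
  name                = "example-nsg" # hypothetical name
  location            = azurerm_resource_group.rg.location
  resource_group_name = azurerm_resource_group.rg.name

  security_rule {
    name                       = "allow-all-inbound"
    priority                   = 100
    direction                  = "Inbound"
    access                     = "Allow"
    protocol                   = "Tcp"
    source_port_range          = "*"
    destination_port_range     = "*" # every port
    source_address_prefix      = "*" # any source, including the internet
    destination_address_prefix = "*" # any destination
  }
}
```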

I ask GitHub Copilot a general question:

“What do you think about the current security rule, is it secure?”

Of course, there is too little context: the AI pair programming tool doesn’t know the concrete purpose of that security rule.

So I try a new question:

“How could I improve the security for this network security group?”

As a result, GitHub Copilot suggests opening only port 3389 for Remote Desktop connections, which would fit in that context.
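Applied to the rule, that suggestion could look like the following `security_rule` fragment, which would replace the wide-open rule inside the network security group. Restricting `source_address_prefix` goes beyond what Copilot suggested; `203.0.113.0/24` is a documentation address range used here as a placeholder for your own admin network.

```hcl
security_rule {
  name                       = "allow-rdp-from-admin-network" # hypothetical name
  priority                   = 100
  direction                  = "Inbound"
  access                     = "Allow"
  protocol                   = "Tcp"
  source_port_range          = "*"              # client source ports are ephemeral
  destination_port_range     = "3389"           # Remote Desktop only
  source_address_prefix      = "203.0.113.0/24" # placeholder admin network
  destination_address_prefix = "*"
}
```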

Although my questions were general, both answers were meaningful, but I think they could be even better.

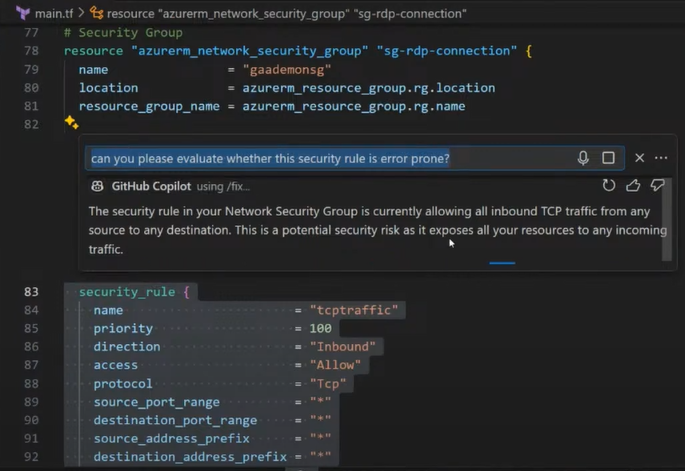

I’ll try again with a generic question:

“Can you please evaluate whether this security rule is error prone?”

Now I got the expected answer:

I’m allowing all inbound TCP traffic from any source to any destination, and that’s of course not a good idea!

Finally, after a few tries, I got the hint that this security rule could be a potential risk.

Code Interpretation

For the next use case, I want to know whether AI tools can interpret arbitrarily chosen source code, such as the snippet below:

This time, I ask Gemini for advice. I paste the code snippet above right after the following question:

“Can you please explain the following code to me?”

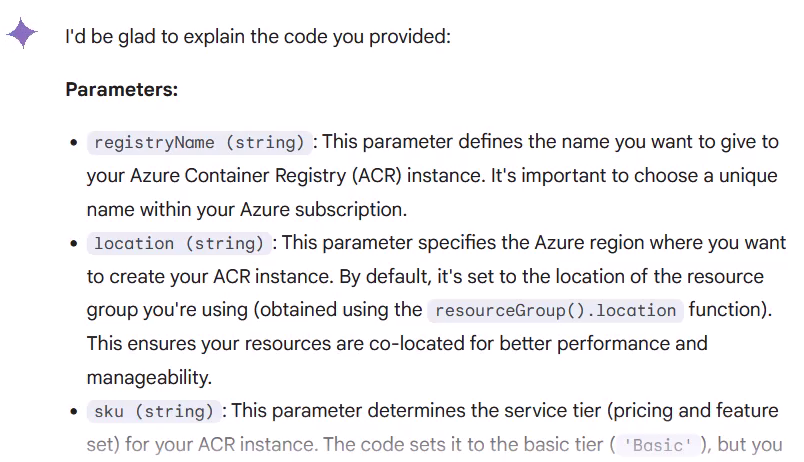

As a result, I get a detailed description of the code, covering its purpose and what the parameters are used for:

But what I was really looking for was the following summary, which gives a meaningful explanation:

So Gemini provided a detailed explanation and, in addition, a brief conclusion that fits.

When using AI tools, one question inevitably comes to mind: are my prompts retained?

Let’s look at the official statements from GitHub and Microsoft:

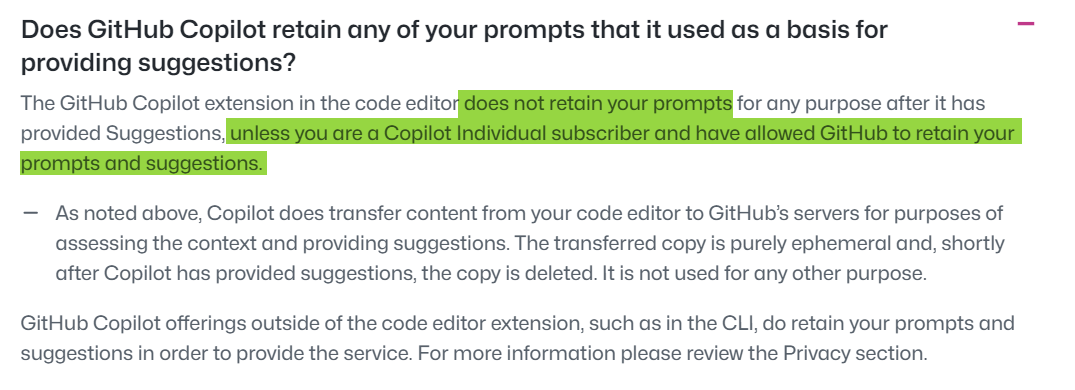

According to GitHub, prompts are not retained when using GitHub Copilot, unless you are a “Copilot Individual subscriber” and have granted permission for it:

What about the Microsoft Copilot?

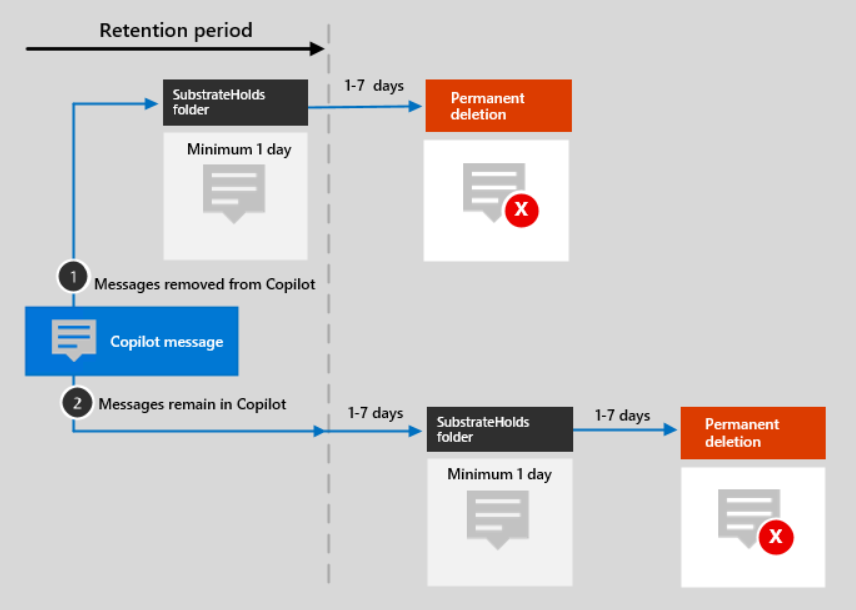

Microsoft provides transparent information about the retention policy:

Conclusion

Using AI tools for Infrastructure as Code works well and speeds up development. General questions are answered quickly with meaningful results. As a software developer, I find code generation in an IDE like Visual Studio Code particularly interesting.

With GitHub Copilot, you get suggestions simply by starting to code or by writing comments. In my humble opinion, the key factor for the quality of the suggestions is providing the proper context. Reviewing my approach, I would raise, among other things, the following questions:

- What should the AI tool know about the purpose of the security rule?

- How can the AI tool know which version of the provider is the best?

Therefore, always keep in mind that AI tools help you generate, review, and interpret code, while you yourself need to keep the “big picture” of what you want to achieve in mind.