A few months ago, I was honored to receive an invitation to speak at DevOpsCon in Munich. I delivered a session on architectural decisions for companies building a Kubernetes platform. Having spent time with strategic companies adopting Kubernetes, I have been able to spot trends, and I am keen to share these learnings with anyone building a Kubernetes platform. I had many interesting ‘corridor’ discussions after my session (it seemed I had touched a raw nerve), and these led me to write this article series.

You can find the first part of this series here: Decisions: Multi-Tenancy, Purpose-Built Operating System

Opinions expressed in this article are solely my own and do not express the views or opinions of my employer.

The challenge(s)

So, you decided to run your containerised applications on Kubernetes, because everyone seems to be doing it these days. Day 0 looks fantastic: you deploy, scale, and observe. Then Day 1 and Day 2 arrive, and Day 3 is around the corner. You suddenly notice that Kubernetes is a microcosm, and you are challenged to make decisions. How many clusters should I create? Which tenancy model should I use? How should I encrypt service-to-service communication?

Decision #3, how many clusters?

Should you have a large number of small-scale clusters or a small number of large-scale clusters? Without getting too deep into the Kubernetes scalability envelope, the following diagram is a good start.

One possible decision model:

- Step 1 – Analyze constraints: untrusted tenants, organizational boundaries, and blast-radius (area-of-impact) concerns

- Step 2 – Optimize within those constraints: fewer clusters lead to more efficient bin packing and resource usage, but increase the blast radius
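
To make the two steps concrete, here is a minimal Python sketch under simplifying assumptions: the `Tenant` fields, the rule that every untrusted tenant gets its own partition, and the `max_tenants_per_cluster` cap (standing in for an acceptable blast radius) are hypothetical choices for illustration, not a prescribed algorithm. Step 1 turns hard constraints into isolation keys; Step 2 packs each partition into as few clusters as the cap allows.

```python
from dataclasses import dataclass
from itertools import groupby


@dataclass(frozen=True)
class Tenant:
    name: str
    org: str       # organizational boundary, e.g. a business unit
    env: str       # "prod" or "non-prod"
    trusted: bool  # untrusted tenants are never co-located with others


def isolation_key(tenant: Tenant) -> tuple:
    """Step 1: only tenants with the same key may share a cluster."""
    owner = tenant.org if tenant.trusted else f"untrusted:{tenant.name}"
    return (tenant.env, owner)


def plan_clusters(tenants: list[Tenant], max_tenants_per_cluster: int) -> list[list[str]]:
    """Step 2: pack each isolation partition into as few clusters as the
    blast-radius cap (max_tenants_per_cluster) allows."""
    clusters = []
    for _, group in groupby(sorted(tenants, key=isolation_key), key=isolation_key):
        names = [t.name for t in group]
        for i in range(0, len(names), max_tenants_per_cluster):
            clusters.append(names[i:i + max_tenants_per_cluster])
    return clusters


# Hypothetical tenants for illustration.
tenants = [
    Tenant("shop", "retail", "prod", trusted=True),
    Tenant("search", "retail", "prod", trusted=True),
    Tenant("partner-x", "retail", "prod", trusted=False),
    Tenant("shop-staging", "retail", "non-prod", trusted=True),
]
print(plan_clusters(tenants, max_tenants_per_cluster=5))
# [['shop-staging'], ['shop', 'search'], ['partner-x']]
```

Raising `max_tenants_per_cluster` gives you fewer, denser clusters (better bin packing, bigger blast radius); lowering it does the opposite, while the Step 1 partitions never merge.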

Conclusions

- Any production workload should be isolated from any non-production workload. This should be hard isolation (separate network boundaries, separate clusters).

- For each cluster, try to define the business deployment unit while you analyze the constraints (e.g., cluster A will host 5 customers, with 10 node groups and 10 VMs per node group).

- Tooling for creating multiple clusters is the new norm (Terraform, Crossplane, cloud provider-specific IaC tools). If you opt for multiple clusters, I recommend defining a clear automation and deployment methodology (tooling, organizational policy).
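
Defining a business deployment unit also turns capacity planning into simple arithmetic. The sketch below uses the example unit above (5 customers, 10 node groups, 10 VMs per node group); the `DeploymentUnit` type and `fleet_size` helper are hypothetical names, and real sizing would also account for headroom, autoscaling, and per-customer variance.

```python
from dataclasses import dataclass
from math import ceil


@dataclass(frozen=True)
class DeploymentUnit:
    customers_per_cluster: int
    node_groups: int
    vms_per_node_group: int

    @property
    def vms_per_cluster(self) -> int:
        return self.node_groups * self.vms_per_node_group


def fleet_size(unit: DeploymentUnit, customers: int) -> tuple[int, int]:
    """Return (clusters, total VMs) needed to onboard `customers`."""
    clusters = ceil(customers / unit.customers_per_cluster)
    return clusters, clusters * unit.vms_per_cluster


unit = DeploymentUnit(customers_per_cluster=5, node_groups=10, vms_per_node_group=10)
print(unit.vms_per_cluster)   # 100
print(fleet_size(unit, 42))   # (9, 900)
```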

Decision #4, authentication & encryption

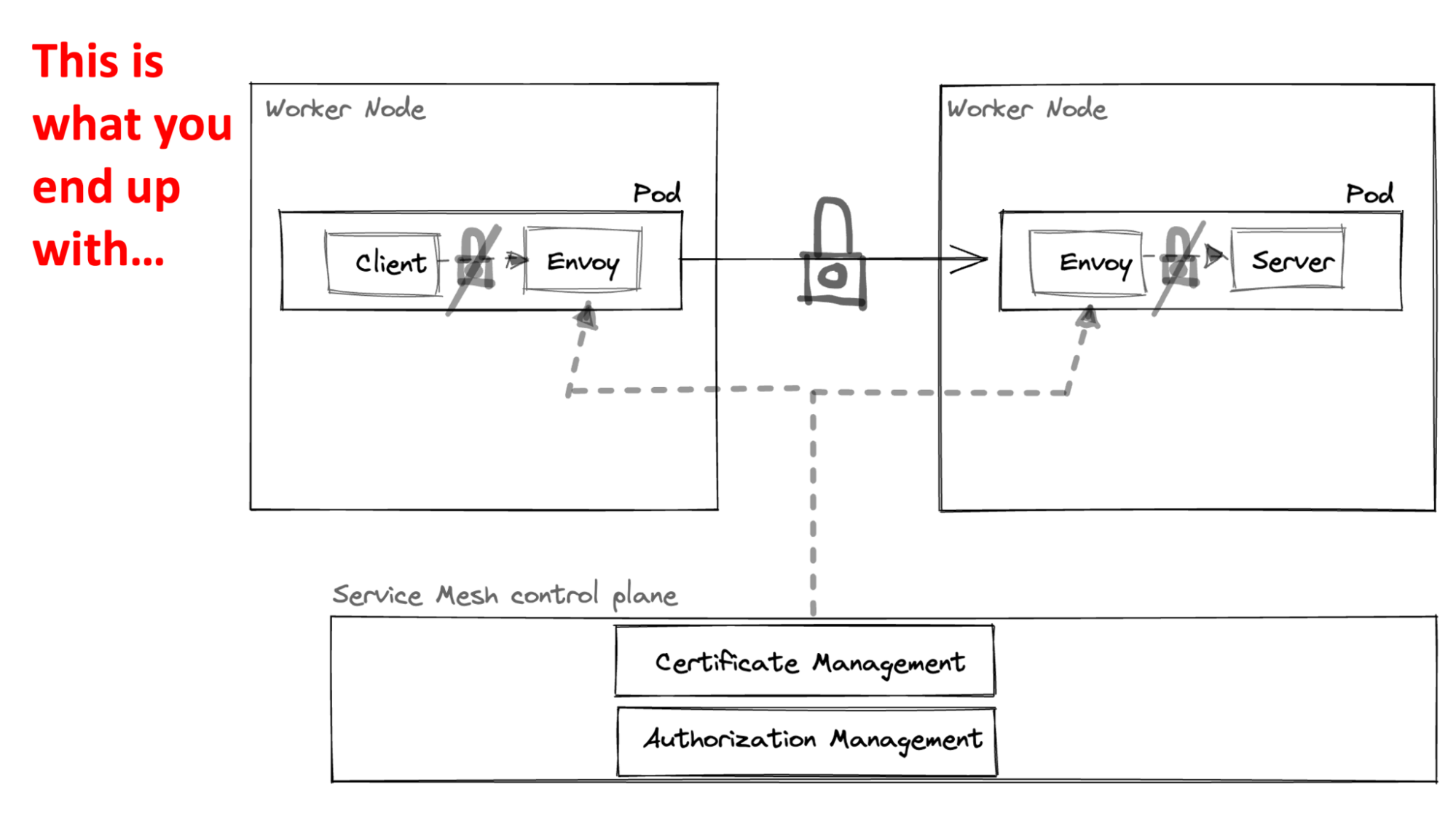

In sensitive zero-trust environments, Pod-to-Pod encryption is very often a mandatory requirement, usually implemented using mTLS. Implementing your own mTLS infrastructure is complex, so very often you will deploy a service mesh such as Istio or Linkerd to achieve this capability.

Implementing a service mesh comes with a steep learning curve and additional operational overhead for you and your teams, and this needs to be taken into consideration. Going the service mesh route, if successful, will allow you to democratize application networking by offloading communication concerns to the mesh data plane; essentially, your developers will only need to care about business logic. mTLS then becomes one foundational feature among a rich set of others: circuit breakers, smart routing, automatic retries, and deep observability (o11y) capabilities.
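
To illustrate what "offloading communication concerns" removes from application code, here is a simplified Python sketch of two of the features listed above: automatic retries with exponential backoff, and a consecutive-failure circuit breaker. The class and function names and the thresholds are hypothetical, and this is not how Istio or Linkerd implement these features; the point is that without a mesh, logic like this tends to be re-implemented in every service and every language.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised instead of calling the upstream while the circuit is open."""


class CircuitBreaker:
    """Opens after `failure_threshold` consecutive failures and fails fast
    until `reset_timeout` seconds pass; then lets one trial call through."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.failures = 0
        self.opened_at = None  # monotonic timestamp when the circuit opened

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if time.monotonic() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; failing fast")
            self.opened_at = None  # half-open: allow a single trial call
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = time.monotonic()
            raise
        self.failures = 0  # any success closes the circuit again
        return result


def with_retries(fn, attempts: int = 3, backoff: float = 0.1):
    """Call `fn`, retrying with exponential backoff; re-raise the last error."""
    for attempt in range(attempts):
        try:
            return fn()
        except CircuitOpenError:
            raise  # retrying against an open circuit would defeat its purpose
        except Exception:
            if attempt == attempts - 1:
                raise
            time.sleep(backoff * 2 ** attempt)
```

With a mesh, equivalent behavior is typically declared as proxy configuration instead, so the application only contains the business call itself.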

The following diagram depicts the dilemma.

So, you end up with a full-blown service mesh control plane and data plane. To be clear, it is not an anti-pattern to implement a service mesh just for mTLS. Truth be told, I have yet to see a non-service-mesh mTLS solution that will make your life easier. By that, I mean that both Istio and Linkerd reduce mTLS to little more than a flag, taking care of all the heavy lifting. mTLS is hard, from key generation and automatic rotation and the integration of data-plane proxies to creating and associating identities. Proper mTLS has many moving parts to take care of at installation and, mainly, at runtime.
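
The handshake side of mTLS is the easy part. This sketch, assuming Python's standard `ssl` module and hypothetical certificate paths, configures a server that rejects clients without a certificate signed by a trusted CA, and a client that presents its own certificate. Everything the paragraph above calls hard (issuing those certificates, rotating them, distributing the CA bundle, and binding each certificate to a workload identity) is deliberately absent, and that missing part is what a service mesh automates.

```python
import ssl


def server_context(ca_file=None, cert_file=None, key_file=None) -> ssl.SSLContext:
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.verify_mode = ssl.CERT_REQUIRED  # the "mutual" part: clients must present a cert
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)  # CA that signs client certs
    if cert_file:
        ctx.load_cert_chain(cert_file, key_file)   # this workload's own cert + key
    return ctx


def client_context(ca_file=None, cert_file=None, key_file=None) -> ssl.SSLContext:
    # PROTOCOL_TLS_CLIENT verifies the server certificate and hostname by default.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)
    if cert_file:
        ctx.load_cert_chain(cert_file, key_file)   # presented when the server asks
    return ctx


# Hypothetical paths, e.g. files mounted from a Kubernetes Secret:
# srv = server_context("/etc/mtls/ca.crt", "/etc/mtls/tls.crt", "/etc/mtls/tls.key")
```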

However, if your hard requirement is encryption in transit rather than mTLS specifically, I encourage you to look into alternative options. Here is one of them:

Cilium and WireGuard can be integrated to provide transparent, network-layer, in-transit encryption for pod-to-pod traffic. This is by far one of the fastest routes I have experienced to set up pod-to-pod encryption.
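
As a rough sketch of how little configuration this takes, the snippet below assembles a Helm command that installs Cilium with WireGuard encryption enabled through the chart's `encryption.enabled` and `encryption.type` values. It assumes the official chart repository has been added under the alias `cilium` (`helm repo add cilium https://helm.cilium.io/`); the chart version is a placeholder, and you should check the Cilium documentation for your version's prerequisites (for example, kernel WireGuard support).

```python
import shlex


def cilium_wireguard_install_cmd(chart_version: str, namespace: str = "kube-system") -> list[str]:
    """Build `helm install` arguments that enable Cilium's WireGuard encryption."""
    return [
        "helm", "install", "cilium", "cilium/cilium",
        "--version", chart_version,  # placeholder: pin the version you validated
        "--namespace", namespace,
        "--set", "encryption.enabled=true",
        "--set", "encryption.type=wireguard",
    ]


cmd = cilium_wireguard_install_cmd("1.15.0")
print(shlex.join(cmd))
# To actually run it: subprocess.run(cmd, check=True)
```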

WireGuard was described as a “work of art” by Linus Torvalds (2018), and it is considered a performant and lightweight secure Virtual Private Network (VPN) solution built into the Linux kernel. One of its main advantages is that the operator does not need to manage a Public Key Infrastructure (PKI): each node in this ‘overlay’ generates its own keys and encrypts and decrypts traffic transparently.
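
Why no PKI? WireGuard's static keys are Curve25519 key pairs: each node generates its private key locally and only needs to share its public key with its peers, after which any two peers can derive the same shared secret. The following pure-Python X25519 sketch (following the Montgomery ladder in RFC 7748) demonstrates just that key-agreement primitive between two hypothetical nodes. It is illustrative only: it is not constant-time and must not be used for real cryptography, and WireGuard's actual handshake (based on the Noise protocol framework) does considerably more than a single Diffie-Hellman exchange.

```python
import secrets

P = 2**255 - 19                          # Curve25519 field prime
A24 = 121665                             # (486662 - 2) / 4, per RFC 7748
BASE_POINT = (9).to_bytes(32, "little")  # standard base point u = 9


def _clamp(scalar: bytes) -> int:
    k = bytearray(scalar)
    k[0] &= 248
    k[31] &= 127
    k[31] |= 64
    return int.from_bytes(k, "little")


def x25519(scalar: bytes, u: bytes) -> bytes:
    """RFC 7748 Montgomery ladder. Illustrative only: NOT constant-time."""
    k = _clamp(scalar)
    x1 = int.from_bytes(u, "little") & ((1 << 255) - 1)
    x2, z2, x3, z3, swap = 1, 0, x1, 1, 0
    for t in reversed(range(255)):
        bit = (k >> t) & 1
        swap ^= bit
        if swap:
            x2, x3, z2, z3 = x3, x2, z3, z2
        swap = bit
        a, b = (x2 + z2) % P, (x2 - z2) % P
        aa, bb = a * a % P, b * b % P
        e = (aa - bb) % P
        c, d = (x3 + z3) % P, (x3 - z3) % P
        da, cb = d * a % P, c * b % P
        x3 = (da + cb) ** 2 % P
        z3 = x1 * (da - cb) ** 2 % P
        x2 = aa * bb % P
        z2 = e * (aa + A24 * e) % P
    if swap:
        x2, x3, z2, z3 = x3, x2, z3, z2
    return (x2 * pow(z2, P - 2, P) % P).to_bytes(32, "little")


def generate_node_keypair() -> tuple[bytes, bytes]:
    """Each node creates its own key pair locally; no CA signs anything."""
    private = secrets.token_bytes(32)
    return private, x25519(private, BASE_POINT)


# Two hypothetical nodes exchange only their public keys...
node_a_priv, node_a_pub = generate_node_keypair()
node_b_priv, node_b_pub = generate_node_keypair()
# ...and each independently derives the same shared secret.
assert x25519(node_a_priv, node_b_pub) == x25519(node_b_priv, node_a_pub)
```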

Another transparent option that I am familiar with is using a cloud provider that offers VMs protected by hardware encryption primitives. These typically encrypt all node-to-node traffic on the wire transparently; this is by far the most performant and frictionless option I have seen.

That being said, network-layer encryption by itself is not considered zero-trust. Why? Network-layer encryption does not provide application- or workload-level identities. A WireGuard identity sits at the network layer (the node), and we all know that, in our new world, nodes are ephemeral, multi-app constructs. mTLS, when backed by a PKI and identity management such as SPIFFE or Linkerd's Kubernetes service-account integration, brings identity down to the workload level. Hence, the identity is application-aware.
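
The difference between the two identity models can be shown in a few lines. This sketch assumes the SPIFFE ID layout used by Istio (`spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>`, with Istio's default trust domain `cluster.local`); the pods, node name, and `ALLOWED_CALLERS` policy are hypothetical. Two pods co-located on the same node share one network-layer identity, while their workload identities remain distinct and can be authorized individually.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pod:
    name: str
    namespace: str
    service_account: str
    node: str


def node_identity(pod: Pod) -> str:
    """All that network-layer encryption can attest to: the sending node."""
    return pod.node


def workload_identity(pod: Pod, trust_domain: str = "cluster.local") -> str:
    """SPIFFE ID in the layout Istio uses: spiffe://<td>/ns/<ns>/sa/<sa>."""
    return f"spiffe://{trust_domain}/ns/{pod.namespace}/sa/{pod.service_account}"


# Hypothetical pods that happen to be scheduled on the same node.
frontend = Pod("frontend-7d9f", "shop", "frontend", node="ip-10-0-1-23")
batch = Pod("batch-5c2a", "analytics", "batch", node="ip-10-0-1-23")

# Hypothetical policy for a payments service: only the frontend may call it.
ALLOWED_CALLERS = {"spiffe://cluster.local/ns/shop/sa/frontend"}

print(node_identity(frontend) == node_identity(batch))  # True: indistinguishable
print(workload_identity(frontend) in ALLOWED_CALLERS)   # True
print(workload_identity(batch) in ALLOWED_CALLERS)      # False
```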

I have also seen companies that use both practices, network-layer encryption and mTLS service-to-service encryption, as a defense-in-depth strategy. mTLS does a very good job for microservice-to-microservice communication patterns. However, if you operate Kubernetes clusters, you know that a significant burden comes from operational add-ons, and the traffic of those add-ons (take CoreDNS as an example) will not be part of your mTLS ‘overlay’. While you can strive to add TLS support to those add-ons (DNS over TLS in the case of CoreDNS), managing their PKI lifecycle will fall outside the scope of the service mesh, resulting in higher operational costs.
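
One way to reason about layered coverage is to classify each traffic flow by the layers that protect it. This is a hypothetical sketch: the `Flow` records are invented, and in practice the inventory would come from your own flow logs or observability tooling. The CoreDNS-style flow shows the gap described above: encrypted at the network layer, but outside the mTLS overlay.

```python
from dataclasses import dataclass
from enum import Enum


class Coverage(Enum):
    DEFENSE_IN_DEPTH = "mTLS + network-layer"
    NETWORK_ONLY = "network-layer only (node identity)"
    MTLS_ONLY = "mTLS only"
    NONE = "unencrypted"


@dataclass(frozen=True)
class Flow:
    src: str
    dst: str
    in_mesh: bool         # both ends are mesh-managed, so mTLS applies
    node_encrypted: bool  # carried by a WireGuard-style node overlay


def coverage(flow: Flow) -> Coverage:
    if flow.in_mesh and flow.node_encrypted:
        return Coverage.DEFENSE_IN_DEPTH
    if flow.node_encrypted:
        return Coverage.NETWORK_ONLY
    if flow.in_mesh:
        return Coverage.MTLS_ONLY
    return Coverage.NONE


# Hypothetical flow inventory.
flows = [
    Flow("frontend", "payments", in_mesh=True, node_encrypted=True),
    Flow("payments", "kube-dns", in_mesh=False, node_encrypted=True),  # CoreDNS add-on
    Flow("node-exporter", "prometheus", in_mesh=False, node_encrypted=False),
]
for f in flows:
    print(f"{f.src} -> {f.dst}: {coverage(f).value}")
```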

Conclusions

- If zero-trust and mTLS are strict requirements, a full service mesh adopted mainly for its mTLS infrastructure might seem like overkill. On the other hand, managing mTLS outside the scope of a service mesh is cumbersome. Some cloud providers are working on technologies and services that aim to (eventually) abstract away these operational complexities.

- There is no such thing as a “silver bullet,” and mTLS will not be your ultimate solution for meeting zero-trust requirements. Your plan should start with a defense-in-depth model and then work backwards to identify the technologies that will help you implement it, one of which is mTLS.

- It is an anti-pattern to weaken zero-trust hard requirements in order to meet operational constraints; policy should drive architecture and tools, not the other way around.

- Because transparent (network-layer) encryption is not workload-aware, it might not cover every pod-to-pod communication pattern. Validate that the encryption patterns you expect are supported.

To sum up

This article outlines several decisions that businesses must consider when adopting a Kubernetes platform. The number of clusters, zero-trust, and mTLS are decisions that are not easily reversible; therefore, their scope should not be limited to the architect, SRE, and platform teams. I would go up to product and sales, who will help better define the roadmap, customer onboarding, and sales cycles, and to the CISO, who will define the zero-trust security policy. What comes next? Keep an eye out for the next part of this article series.
