So what exactly is a multi-cloud? A multi-cloud setup uses several cloud providers or cloud platforms while giving the user the impression of working with a single cloud. For the most part, people try to advance to this evolutionary stage of cloud computing to achieve independence from individual cloud providers.

The use of multiple cloud providers boosts resilience and availability, and of course enables the use of technologies that individual cloud providers do not provide. As an example, deploying your Alexa Skill in the Cloud is relatively difficult if you’ve decided to use Microsoft Azure as your provider. In addition, a multi-cloud solution gives us the ability to host applications with high requirements in terms of processing power, storage and network performance with a cloud provider that meets these needs. In turn, less critical applications can be hosted by a lower-cost provider to reduce IT costs.

Naturally, the multi-cloud approach is not all benefit. Using multiple cloud providers makes the design of your infrastructure considerably more complex and more difficult to manage. The number of potential error sources increases, and administering the billing for each individual cloud provider becomes more complex.

You should weigh the advantages against the disadvantages before you make a decision. If you conclude that dependence on a single cloud provider is not a real concern for you, the time is better invested in making good use of that provider's cloud services.

What is Function-as-a-Service?

In 2014, the Function-as-a-Service (FaaS) concept first appeared on the market with the introduction of hook.io. In the years that followed, all the major players in IT jumped on the bandwagon with products such as AWS Lambda, Google Cloud Functions, IBM OpenWhisk and Microsoft Azure Functions. The characteristics of such a function are as follows:

- The server, network, operating system, storage, etc. are abstracted away from the developer

- Billing is based on usage with accuracy to the second

- FaaS is stateless, meaning that a database or file system is needed to store data or states.

- It is highly scalable
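The statelessness point is the one that most visibly shapes the code. The following is a minimal sketch, assuming a hypothetical `StateStore` interface that stands in for a database or file system; neither the interface nor the in-memory implementation comes from any FaaS product.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical abstraction for external state (a database, an object store, ...).
interface StateStore {
    long increment(String key);
}

// In-memory stand-in used only for illustration; a real function would talk
// to a service that outlives the individual function instance.
class InMemoryStateStore implements StateStore {
    private final Map<String, Long> counters = new ConcurrentHashMap<>();

    @Override
    public long increment(String key) {
        return counters.merge(key, 1L, Long::sum);
    }
}

// The function keeps no state of its own: every invocation reads and writes
// through the store, so any instance can serve any request.
class VisitCounterFunction implements Function<String, String> {
    private final StateStore store;

    VisitCounterFunction(StateStore store) {
        this.store = store;
    }

    @Override
    public String apply(String user) {
        long visits = store.increment(user);
        return "Hello " + user + ", visit #" + visits;
    }
}
```

Two separate `VisitCounterFunction` instances that share one store see the same counter, which is the behavior you want when the platform scales a function out to several instances.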

Yet what advantages does the entire package offer? Probably the biggest advantage is that the developer no longer has to worry about the infrastructure, but only needs to address individual functions. The services are highly scalable, enabling accurate and usage-based billing. This allows you to achieve maximum transparency in terms of product costs. The logic of the application can be divided into individual functions, providing considerably more flexibility for the implementation of other requirements. The functions can be used in a variety of scenarios. These often include:

- Web requests

- Scheduled jobs and tasks

- Events

- Manually started tasks

FaaS with AWS Lambda

As a first step, we will create a new Maven project. To be able to use the AWS Lambda-specific functionalities, we need to add the dependency seen in Listing 1 to our project.

Listing 1: Core Dependency for AWS Lambda

    <dependency>
      <groupId>com.amazonaws</groupId>
      <artifactId>aws-lambda-java-core</artifactId>
      <version>1.2.0</version>
    </dependency>

The next step is to implement a handler that takes the request and returns a response to the caller. There are two such handlers in the Core Dependency: the RequestHandler and the RequestStreamHandler. We will use RequestHandler in our sample project and declare inbound and outbound as a string (Listing 2).

Listing 2: Handler class for requests

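    package de.developerpat.handler;

    import com.amazonaws.services.lambda.runtime.Context;
    import com.amazonaws.services.lambda.runtime.RequestHandler;

    public class LambdaMethodHandler implements RequestHandler<String, String> {

        @Override
        public String handleRequest(String input, Context context) {
            // Write the incoming payload to the function's log
            context.getLogger().log("Input: " + input);
            return "Hello World " + input;
        }
    }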
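For comparison, the stream-based variant works on raw input and output streams instead of typed objects. The sketch below is plain Java that only mirrors the shape of `RequestStreamHandler.handleRequest`. It deliberately does not implement the AWS interface and omits the `Context` parameter so that it compiles without the Lambda dependency. The class name `StreamEchoSketch` is made up for illustration.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

class StreamEchoSketch {

    // Reads the whole request body, then writes the greeting to the response stream.
    static void handle(InputStream input, OutputStream output) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        byte[] chunk = new byte[1024];
        int read;
        while ((read = input.read(chunk)) != -1) {
            buffer.write(chunk, 0, read);
        }
        String body = new String(buffer.toByteArray(), StandardCharsets.UTF_8);
        output.write(("Hello World " + body).getBytes(StandardCharsets.UTF_8));
    }
}
```

With this style the function is responsible for decoding and encoding the payload itself, which is why the typed `RequestHandler` is the more convenient choice for a simple string-in, string-out example.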

If you then execute a Maven build, a .jar file is created that can be deployed later. Even at this point you can clearly see that the Lambda dependency hard-wires the code to AWS. As mentioned at the beginning, this is not necessarily a bad thing, but the decision should be made consciously. If you then use the function to work with an AWS-specific database, this coupling grows even stronger.

Deployment of the function: There are several ways to deploy a Lambda function, either manually through the AWS console or automatically through a CI server. For this sample project, the automated path was chosen using Travis CI.

Travis is a cloud-based CI server that supports different languages and target platforms. The great advantage of Travis is that it can be connected to your own GitHub account within a matter of seconds. What do we need to do this? Travis is configured through a file called .travis.yml. In our case, we need Maven for the build and the connection to our AWS-hosted Lambda function for the deployment.

As you can see from the configuration in Listing 3, you need the following configuration parameters for a successful deployment:

- Provider: In Travis, this is the destination provider to deploy to in the deploy step

- Runtime: The runtime needed to execute the deployment

- Handler name: Here we have to specify our request handler, including package, class and method names

- Amazon Credentials: Last but not least, we need to enter the credentials so that the build can deploy the function. This can be done by using the following commands:

- AccessKey: travis encrypt "Your AccessKey" --add deploy.access_key_id

- Secret_AccessKey: travis encrypt "Your SecretAccessKey" --add deploy.secret_access_key

- If these credentials are not passed through the configuration, Travis looks for the environment variables AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY

Listing 3: Configuration of ".travis.yml"

    language: java
    jdk:
      - openjdk8
    script: mvn clean install
    deploy:
      provider: lambda
      function_name: SimpleFunction
      region: eu-central-1
      role: arn:aws:iam::001843237652:role/lambda_basic_execution
      runtime: java8
      handler_name: de.developerpat.handler.LambdaMethodHandler::handleRequest
      access_key_id:
        secure: {Your_Access_Key}
      secret_access_key:
        secure: {Your Secret Access Key}
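The handler name in Listing 3 follows the pattern `package.Class::method`. As a rough illustration of how such a string can be taken apart and resolved, here is a small sketch using plain reflection. It is not how AWS Lambda or Travis process the value internally. `HandlerName` and `Greeter` are hypothetical names used only here.

```java
import java.lang.reflect.Method;

// Stand-in for a handler class; in the article this role is played by LambdaMethodHandler.
class Greeter {
    public String handleRequest(String input) {
        return "Hello World " + input;
    }
}

class HandlerName {
    final String className;
    final String methodName;

    private HandlerName(String className, String methodName) {
        this.className = className;
        this.methodName = methodName;
    }

    // Splits "package.Class::method" into its class and method parts.
    static HandlerName parse(String handler) {
        int separator = handler.indexOf("::");
        if (separator <= 0 || separator + 2 >= handler.length()) {
            throw new IllegalArgumentException(
                "Expected package.Class::method but got: " + handler);
        }
        return new HandlerName(
            handler.substring(0, separator),
            handler.substring(separator + 2));
    }

    // Loads the class, creates an instance and calls the method with one String argument.
    Object invoke(String input) throws ReflectiveOperationException {
        Class<?> type = Class.forName(className);
        Method method = type.getMethod(methodName, String.class);
        Object instance = type.getDeclaredConstructor().newInstance();
        return method.invoke(instance, input);
    }
}
```

Parsing the value from Listing 3 yields the class name `de.developerpat.handler.LambdaMethodHandler` and the method name `handleRequest`, which is a quick way to see that a typo in either part would leave the platform without a method to call.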

Interim conclusion: The effort needed to provide a Lambda function is relatively low. The interfaces to the Lambda implementation are clear and easy to understand. Because AWS provides the Lambda runtime as a managed service, we don't have to worry about installation and configuration, and on top of that, we receive certain services right out of the box (such as logging and monitoring). Of course, this implies a very strong connection to AWS. So if, for example, you want to switch to Microsoft Azure or to Google Cloud for some reason, you need to migrate the function and adjust your code accordingly.
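One way to keep such a migration small is to hold the business logic in a class that knows nothing about the provider and to keep the provider-specific handler as a thin adapter. The following is a minimal sketch of that idea. The class name `GreetingLogic` is hypothetical, and the adapter is only indicated in comments so that the block compiles without the Lambda dependency.

```java
import java.util.function.Function;

// Provider-neutral business logic: no AWS, Azure or Google imports.
class GreetingLogic implements Function<String, String> {
    @Override
    public String apply(String input) {
        return "Hello World " + input;
    }
}

// Adapter side, shown as a comment only:
//
//   public class LambdaMethodHandler implements RequestHandler<String, String> {
//       private final GreetingLogic logic = new GreetingLogic();
//
//       @Override
//       public String handleRequest(String input, Context context) {
//           return logic.apply(input);
//       }
//   }
//
// The entry point of another provider would delegate to the same class.
```

With this split, a provider switch means rewriting the adapter and the deployment configuration, while `GreetingLogic` and its tests stay untouched.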

FaaS with Knative

The open-source Knative project was launched in 2018. The founding fathers were Google and Pivotal, but all the IT celebrities such as IBM and Red Hat have since joined the game. The Knative framework is based on Kubernetes and Istio, which provide the application environment (container-based) and advanced network routing. Knative expands Kubernetes with a suite of middleware components essential for designing modern container-based applications. Since Knative is based on Kubernetes, it is possible to host the applications locally, in the cloud or in a third-party data center.

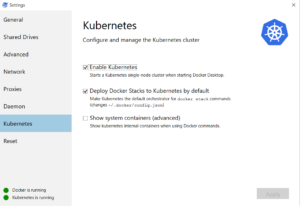

Pre-Steps: Whether it's AWS, Microsoft, IBM or Google, nowadays every big cloud provider offers a "managed Kubernetes". For test purposes, you can also simply use a local Minikube or Minishift. For my use case, I use the single-node Kubernetes cluster supplied with Docker for Windows, which can be easily activated via the Docker UI (Fig. 1).

Unfortunately, a plain Kubernetes cluster alone does not get us very far. So how do we install Knative? Knative requires Istio, so Istio has to be installed first. There are two ways to install Istio and Knative: an automated method and a manual one.

Individual installation steps for the different cloud providers, including the manual steps, are provided in the Knative documentation on GitHub. Note that the provider-specific part is limited to provisioning a Kubernetes cluster. From the Istio installation onward, the procedure is the same for all cloud providers.

For the automated method, Pivotal has released a framework called riff. We will run into this later on as well during development. riff is designed to simplify the development of Knative applications and to support all core components. At the time of writing, it is available in version 0.2.0 and assumes a kubectl that is configured for the correct cluster. If you have this, you can use the following command to install Istio and Knative via the CLI.

>>riff system install

Warning! If you are installing Knative locally, the parameter --node-port needs to be added. After you run this command, the message "riff system install completed successfully" should appear within a few minutes.

Now we have our application environment with Kubernetes, Istio and Knative set up. Relatively fast and easy thanks to riff – in my opinion at least.

Development of a function: A number of programming languages are supported for developing a function that will then be hosted on Knative. A few sample projects can be found on Knative's GitHub presence. For my use case, I decided to use the Spring Cloud Function project. It is aimed at delivering business logic as a function, while bringing all the benefits of the Spring universe with it (autoconfiguration, dependency injection, metrics, etc.).

As a first step, you add the dependency shown in Listing 4 to your pom.xml.

Listing 4: “spring-cloud-starter-function-web” dependency

    <dependency>
      <groupId>org.springframework.cloud</groupId>
      <artifactId>spring-cloud-starter-function-web</artifactId>
    </dependency>

Once we have done that, we can write our little function. To make the Lambda function and the Spring function comparable, we will implement the same logic. Since our logic is very minimal, I will implement it directly in the Spring Boot application class. Of course, you can also use dependency injection as you normally would with Spring. We use the java.util.function.Function interface to implement the function (Listing 5). It is returned as an object by our hello method. It is important that the method carries the @Bean annotation, otherwise the endpoint will not be exposed.

Listing 5: Implementation of our function

    package de.developerpat.springKnative;

    import java.util.function.Function;

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.context.annotation.Bean;

    @SpringBootApplication
    public class SpringKnativeApplication {

        // Exposed as an HTTP endpoint by spring-cloud-starter-function-web
        @Bean
        public Function<String, String> hello() {
            return value -> "Input: " + value;
        }

        public static void main(String[] args) {
            SpringApplication.run(SpringKnativeApplication.class, args);
        }
    }
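Because the bean is an ordinary `java.util.function.Function`, its logic can be called and composed in plain Java without starting Spring. The sketch below repeats the logic of the `hello()` bean from Listing 5 without the annotations. The class `HelloFunctionSketch` and the second function `shout()` are hypothetical and exist only to show composition.

```java
import java.util.Locale;
import java.util.function.Function;

class HelloFunctionSketch {

    // Same logic as the hello() bean in Listing 5, minus the Spring annotations.
    static Function<String, String> hello() {
        return value -> "Input: " + value;
    }

    // Hypothetical second function, used only to demonstrate andThen().
    static Function<String, String> shout() {
        return value -> value.toUpperCase(Locale.ROOT);
    }

    public static void main(String[] args) {
        Function<String, String> pipeline = hello().andThen(shout());
        System.out.println(hello().apply("Patrick"));
        System.out.println(pipeline.apply("Patrick"));
    }
}
```

This keeps unit tests fast, since they exercise the function object directly and leave the HTTP wiring to Spring Cloud Function.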

Knative Deployment: Before we can deploy our function, we first need to initialize our namespace with riff. This stores the user name and the secret of our Docker registry so that the image can be pushed when the function is created. We do not have to write the image ourselves. riff takes care of this for us, using a few Cloud Foundry buildpacks. In my case, I use Docker Hub as the Docker registry. Initialization can be run using the following command:

>>riff namespace init default --dockerhub $DOCKER_ID

If you want to initialize a namespace other than default, just change the name. But now, we want to deploy our function. Of course, we have checked everything into our GitHub repository, and we now want to build this state and subsequently deploy it. Once riff has built our Maven project, a Docker image should be created and pushed into our Docker Hub repository. The following command takes care of this for us:

>>riff function create springknative --git-repo https://github.com/developerpat/spring-cloud-function.git --image developerpat/springknative:v1 --verbose

If we want to do all that from a local path, we swap --git-repo with --local-path and add the corresponding path. If you now take a look at how the command runs, you can see that the project is analyzed and built with the correct buildpack, and that the finished Docker image is pushed and deployed at the end.

Calling the function: Now we would like to test whether a call to our function works, which with AWS is also relatively easy to do via the console. Thanks to the riff CLI, we can do that very simply as follows:

>>riff service invoke springknative --text -- -w '\n' -d Patrick

curl http://localhost:32380/ -H 'Host: springknative.default.example.com' -H 'Content-Type: text/plain' -w '\n' -d Patrick

Hello Patrick

The invoke command generates and executes the corresponding curl call for you. As you can see, our function works flawlessly.

Can Knative compete with an integrated Amazon Lambda?

Due to the fact that Knative is based on Kubernetes and Istio, some features are already available natively:

Kubernetes

- Scaling

- Redundancies

- Rolling out and back

- Health checks

- Service discovery

- Config and Secrets

- Resilience

Istio

- Logging

- Tracing

- Metrics

- Failover

- Circuit Breaker

- Traffic Flow

- Fault Injection

This range of functionality comes very close to the functional scope of a Function-as-a-Service solution like AWS Lambda. There is one shortcoming, however: there are no UIs for this functionality. If you want to visualize the monitoring information in a dashboard, you need to set up a solution for it yourself (such as Prometheus). A few tutorials and support documents are available in the Knative documentation. With AWS Lambda, you get those UIs out of the box and don't need to set anything up yourself.

Multi-cloud capability

All major cloud providers now offer a managed Kubernetes. Since Knative uses Kubernetes as the base environment, it is completely independent of the Kubernetes provider. So it’s no issue to migrate your applications from one environment to another in a very short amount of time. The biggest effort here is to change the target environment in the configuration during deployment. Based on these facts, simple migration and exit strategies can be developed.

Conclusion

Knative does not comply with every item of the FaaS manifesto. So is it FaaS at all? From my personal point of view, by all means yes. For me, the most important item in the FaaS manifesto is that no machines, servers, VMs or containers are visible in the programming model. Knative fulfills this item in conjunction with the riff CLI.